PREMIUM SAMPLE

Premium members get two exclusive deep dives weekly:

Mondays: ready-to-use business prompts

Thursdays: real-world AI case studies

When Harvard Business School fielded 3,112 questions from 250 MBA students over 14 weeks, they didn't hire more professors; they rebuilt mentoring around AI. The challenge isn't unique to Harvard: every knowledge-intensive organization eventually hits the same wall, where expertise doesn't scale and key people become bottlenecks.

"It's not about replacing experts—it's about amplifying their impact," explains Professor Jeffrey Bussgang. "We needed to transform how knowledge flows through our organization."

Their solution, ChatLTV, gave Harvard a way to scale expertise without sacrificing quality. The results:

One query answered every 42 minutes, on average

70% reduction in administrative emails*

Real-time insight into student learning patterns

99% of usage in private chats

The Execution Playbook: Your 4-Week Implementation Guide

Building an AI mentor for future business leaders isn't like launching a chatbot. Harvard needed a system that could handle everything from late-night strategy questions to complex market analyses without compromising academic standards. Their four-week journey from concept to launch offers a playbook any knowledge-intensive organization can follow.

Week 1: Take Stock of Your Knowledge

Start by collecting everything your team knows. Pull together your process documents, training materials, and past team discussions.

Look for patterns in what your team asks most often. Find the top five questions that keep coming up in emails and meetings.
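If your questions already live in an email or chat export, a few lines of code can surface the most common ones. This is a minimal sketch, assuming the questions are available as a list of strings; the sample inbox and the `normalize` and `top_questions` helpers are hypothetical. Exact-match counting only catches near-identical wording, so treat it as a first pass before grouping similar questions by hand.

```python
import re
from collections import Counter


def normalize(question: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace so trivial
    variations of the same question are counted together."""
    cleaned = re.sub(r"[^a-z0-9\s]", "", question.lower())
    return " ".join(cleaned.split())


def top_questions(questions: list[str], n: int = 5) -> list[tuple[str, int]]:
    """Return the n most frequent normalized questions with their counts.
    Ties keep the order in which questions were first seen."""
    return Counter(normalize(q) for q in questions).most_common(n)


# Hypothetical sample pulled from an inbox export.
inbox = [
    "When is the assignment due?",
    "when is the assignment due",
    "Where are the case materials?",
    "When is the assignment due??",
    "How do I submit my deck?",
]

for question, count in top_questions(inbox):
    print(f"{count}x  {question}")
```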

Write down who needs access to what information. This helps you set the right security guardrails from the start.
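Writing the access map down as data, rather than prose, makes it enforceable later: the retrieval step can filter documents before anything reaches the model. A minimal sketch, assuming simple role-based access; the roles, collection names, document ids, and the `allowed_documents` helper are all hypothetical.

```python
# Hypothetical role-to-collection map drafted in Week 1.
ACCESS_MAP: dict[str, set[str]] = {
    "support_agent": {"product_specs", "support_scripts"},
    "sales_manager": {"product_specs", "support_scripts", "pricing"},
}

# Each document is tagged with the collection it belongs to.
DOCUMENTS = [
    {"id": "doc-1", "collection": "product_specs"},
    {"id": "doc-2", "collection": "pricing"},
    {"id": "doc-3", "collection": "hr_records"},
]


def allowed_documents(role: str, documents: list[dict]) -> list[str]:
    """Return ids of documents this role may see; unknown roles see nothing."""
    permitted = ACCESS_MAP.get(role, set())
    return [d["id"] for d in documents if d["collection"] in permitted]


print(allowed_documents("support_agent", DOCUMENTS))  # ['doc-1']
print(allowed_documents("sales_manager", DOCUMENTS))  # ['doc-1', 'doc-2']
```

Defaulting unknown roles to an empty set means a typo in a role name denies access instead of granting it.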

The difference between a good AI system and a great one often comes down to its foundation. Harvard's architecture wasn't just about answering questions; it was about creating an institutional memory that becomes more useful with every interaction. Here's how they built a system that thinks like a professor but scales like software.

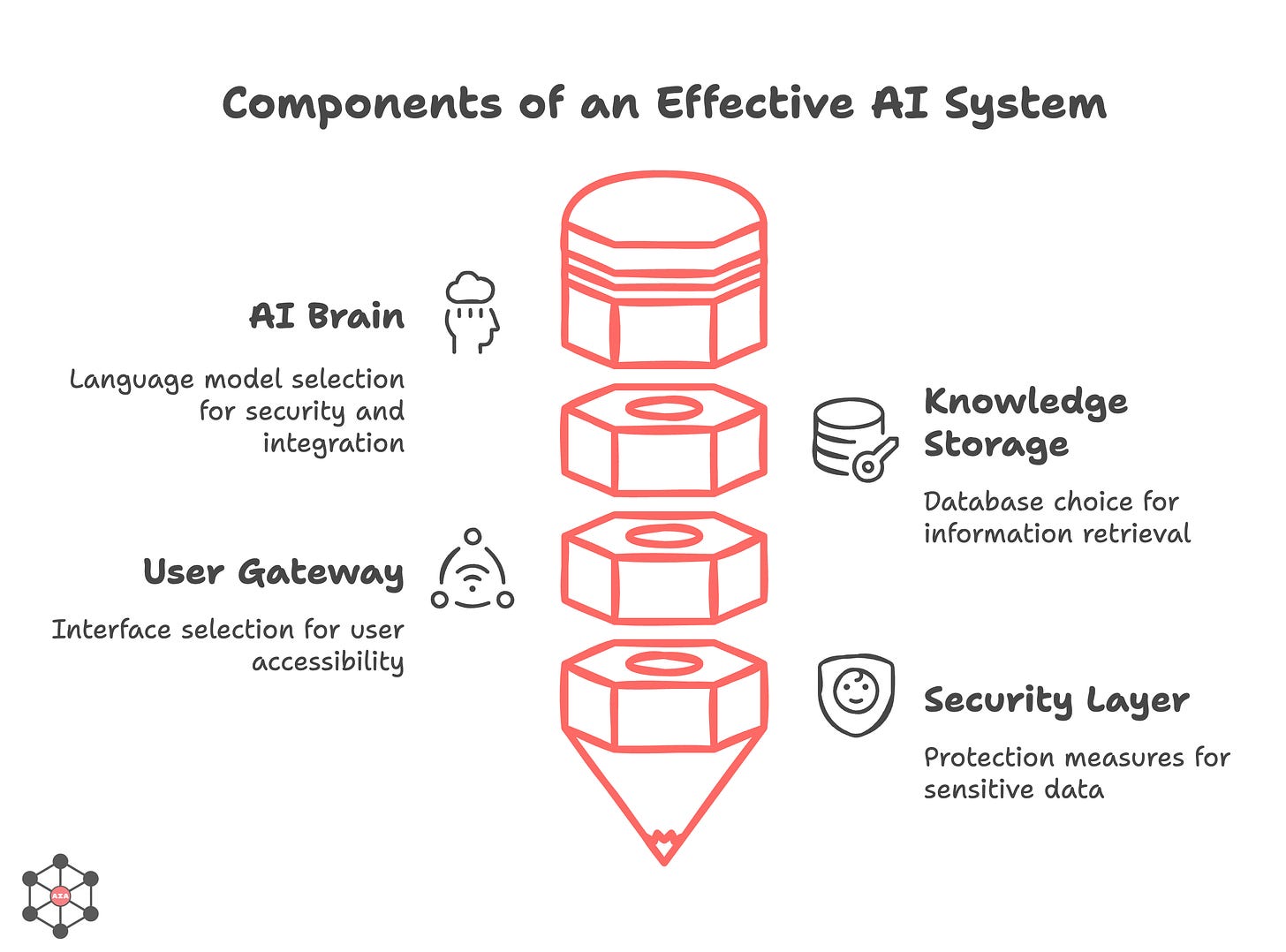

Week 2: Technical Architecture

Your AI system needs four key pieces to work effectively. Here's what each does and how to choose:

The AI Brain (Language Model)

Harvard chose Azure OpenAI because it offered enterprise-grade security and kept their data private. You can pick:

Azure OpenAI: Best for companies already using Microsoft tools

AWS Bedrock: Ideal if you're already on Amazon’s cloud

The Knowledge Storage (Vector Database)

Think of this as your AI’s long-term memory. Harvard used Pinecone to store course material and quickly retrieve the passages most relevant to each question. Your options:

Pinecone: Easy to start with, works well for most companies

Weaviate: More flexible but requires more technical expertise
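Under the hood, a vector database stores each passage as a vector of numbers and returns the passages whose vectors sit closest to the question's vector. The sketch below imitates that with a toy word-count "embedding" and cosine similarity so it runs without any external service; a real deployment would use an embedding model plus Pinecone or Weaviate instead. The passages and the `TinyVectorStore` class are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, passage: str) -> None:
        self.items.append((passage, embed(passage)))

    def query(self, question: str, top_k: int = 2) -> list[str]:
        """Return the top_k passages most similar to the question."""
        q = embed(question)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [passage for passage, _ in ranked[:top_k]]


store = TinyVectorStore()
store.add("Founder-market fit describes how well a founding team suits its market.")
store.add("Assignments are due Friday at noon via the course portal.")
store.add("Unit economics compare customer lifetime value with acquisition cost.")

print(store.query("When are assignments due?", top_k=1))
```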

The User Gateway (Interface)

Harvard connected their AI through Slack because students already used it daily. Consider:

Slack: Great for teams that live in Slack

Microsoft Teams: Natural choice for Microsoft-heavy organizations

Custom Portal: Best when you need special features or branding

The Security Layer

Harvard protected sensitive content by sharing only small chunks of information at a time. You might use:

AWS KMS: Encryption tool that works well with AWS

Azure Key Vault: Microsoft’s tool for keeping secrets safe

Chunked delivery: Break up sensitive content into smaller pieces
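Chunked delivery can be as simple as splitting each document into small, overlapping word windows and handing the model only the few chunks retrieval selects, so no single answer exposes a whole document. A minimal sketch, assuming word-based chunks; the `chunk_words` name and the sizes are illustrative, not Harvard's settings. Key management with AWS KMS or Azure Key Vault is a separate concern and isn't shown.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words
    with the previous window so text cut at a boundary keeps some context."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


document = " ".join(f"w{i}" for i in range(1, 121))  # 120 placeholder words
chunks = chunk_words(document, size=50, overlap=10)
print(len(chunks))  # 3
```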

Pro Tip: Start with tools your team already knows. Harvard's success came partly from using Slack, which everyone was already comfortable with.

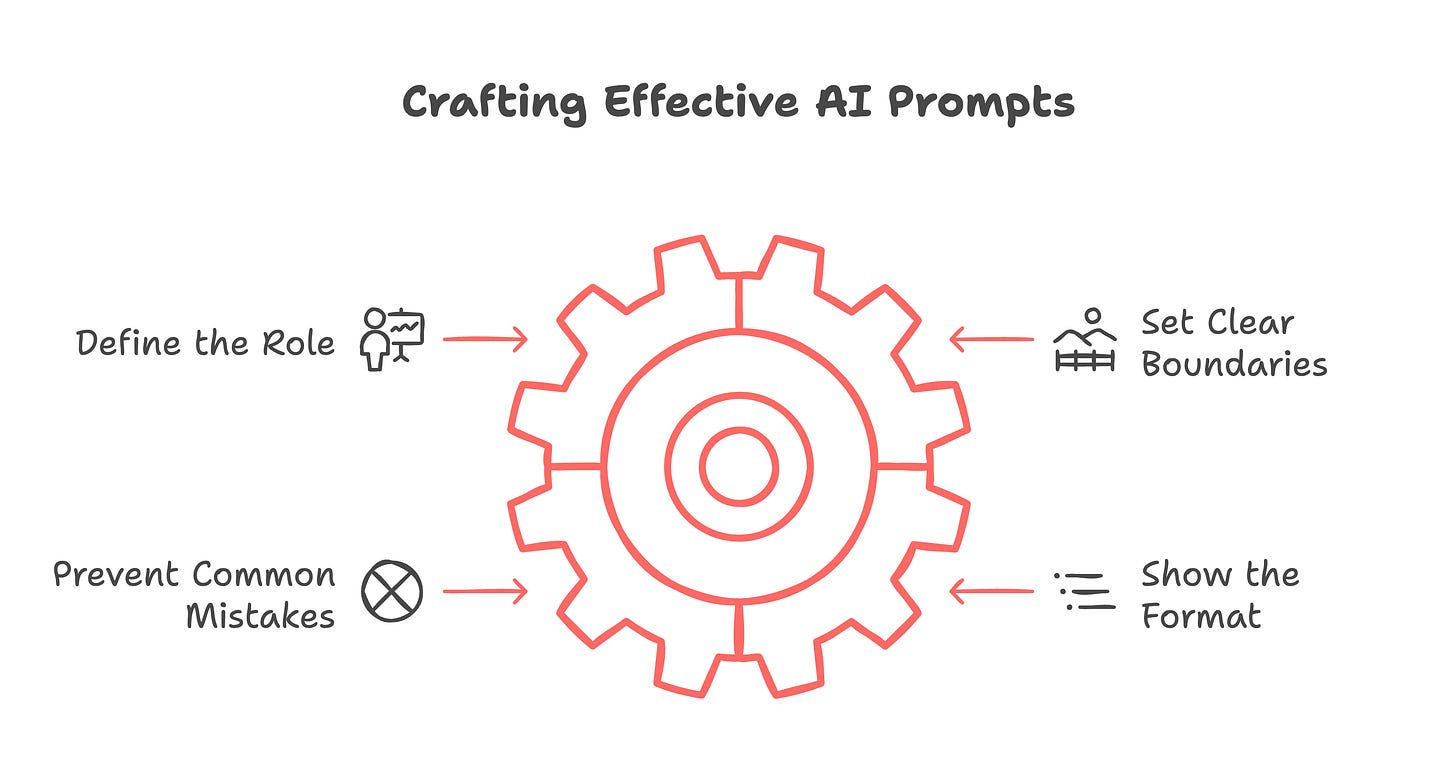

Week 3: The Killer Prompt Template

Harvard discovered that how you instruct your AI is crucial. Here's their winning formula broken down with real examples:

Basic Structure:

You are a [role] helping [audience] with [task].

Use ONLY [specific documents].

Never [common failure modes].

Format responses like [example].

Example from Harvard:

You are a startup expert helping MBA students with case analysis.

Use ONLY the provided course materials and teaching notes.

Never speculate beyond the source material or provide generic advice.

Format responses with:

- Key insights first

- Supporting evidence from specific cases

- Source citations at the end

How to Build Your Version:

Define the Role: Instead of vague instructions like "help with questions," be specific:

"You are a senior sales trainer helping new reps understand our products"

"You are a technical expert helping customers troubleshoot our software"

Set Clear Boundaries: Tell your AI exactly what content to use:

"Use ONLY the Q4 2024 product specifications"

"Reference ONLY approved customer support scripts"

Prevent Common Mistakes: List specific things your AI should avoid:

"Never share pricing information"

"Never recommend features that aren't in the official documentation"

Show the Format: Give an example of how answers should look:

"Start with the solution, then explain why"

"Include step-by-step instructions when relevant"

Pro Tip: Test your prompt with different questions and refine it based on the responses. Harvard found that adding "cite your sources" dramatically improved user trust.
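The four-part template (role, allowed sources, failure modes, format) is easy to turn into a reusable function so every assistant your team builds starts from the same structure. A minimal sketch; the `build_system_prompt` name and its parameters are hypothetical, and the optional citation line follows the "cite your sources" tip rather than Harvard's exact wording.

```python
def build_system_prompt(
    role: str,
    audience: str,
    task: str,
    sources: str,
    never: list[str],
    format_rules: list[str],
    cite_sources: bool = True,
) -> str:
    """Fill in the role / sources / failure modes / format template."""
    lines = [
        f"You are {role} helping {audience} with {task}.",
        f"Use ONLY {sources}.",
    ]
    lines += [f"Never {rule}." for rule in never]
    if format_rules:
        lines.append("Format responses as follows:")
        lines += [f"- {rule}" for rule in format_rules]
    if cite_sources:
        lines.append("Cite your sources at the end of every answer.")
    return "\n".join(lines)


prompt = build_system_prompt(
    role="a senior sales trainer",
    audience="new reps",
    task="product questions",
    sources="the Q4 2024 product specifications",
    never=[
        "share pricing information",
        "recommend features that aren't in the official documentation",
    ],
    format_rules=[
        "Start with the solution, then explain why",
        "Include step-by-step instructions when relevant",
    ],
)
print(prompt)
```

Keeping the template in one function means a fix discovered during testing (a new "Never" rule, say) reaches every assistant at once.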

Week 4: Launch and Monitor

Choose Your Test Group: Pick 10 team members who know your processes well and aren't shy about giving feedback. Include people from different departments and experience levels to get diverse perspectives.

Track Usage Patterns: Watch what your team asks about most often and when they need help. Harvard learned that most users preferred private chats and often worked late at night, insights that shaped the system's availability and privacy features.
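Patterns like these fall out of a simple query log. A minimal sketch, assuming each logged query records a timestamp and whether it was asked in a private chat; the log format, the sample entries, the `usage_summary` helper, and the 10 p.m. to 6 a.m. "late night" window are all hypothetical.

```python
from collections import Counter
from datetime import datetime

# Hypothetical query log: (timestamp, asked_in_private_chat)
query_log = [
    (datetime(2024, 2, 5, 23, 40), True),
    (datetime(2024, 2, 6, 1, 15), True),
    (datetime(2024, 2, 6, 14, 5), False),
    (datetime(2024, 2, 6, 2, 30), True),
    (datetime(2024, 2, 7, 23, 10), True),
]


def usage_summary(
    entries: list[tuple[datetime, bool]], late_start: int = 22, late_end: int = 6
) -> dict:
    """Share of private queries, share of late-night queries, and busiest hour."""
    if not entries:
        return {}
    total = len(entries)
    private = sum(1 for _, is_private in entries if is_private)
    late = sum(1 for ts, _ in entries if ts.hour >= late_start or ts.hour < late_end)
    busiest_hour, _ = Counter(ts.hour for ts, _ in entries).most_common(1)[0]
    return {
        "private_share": private / total,
        "late_night_share": late / total,
        "busiest_hour": busiest_hour,
    }


print(usage_summary(query_log))
```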

Get Better Fast: Meet with users weekly to hear what works and what doesn't. Fix problems as soon as you spot them. Show your team how their feedback shapes improvements; they'll give you better suggestions when they see you're listening.

What Harvard Learned the Hard Way

Harvard hit four main roadblocks and solved them in ways you can copy:

Too Much Jargon: Students struggled with startup terms and acronyms. Adding a built-in glossary helped new students learn the language three times faster than before.
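A glossary can be added without retraining anything: scan each answer for known terms and append short definitions. A minimal sketch of one way to do it; the glossary entries and the `add_glossary` helper are hypothetical, and this is not necessarily how Harvard built theirs.

```python
import re

# Hypothetical glossary of course jargon.
GLOSSARY = {
    "TAM": "total addressable market, the overall revenue opportunity for a product",
    "CAC": "customer acquisition cost, the cost of winning one new customer",
    "PMF": "product-market fit, evidence that a product meets strong market demand",
}


def add_glossary(answer: str, glossary: dict[str, str] = GLOSSARY) -> str:
    """Append definitions for each glossary term found as a whole word."""
    found = [term for term in glossary if re.search(rf"\b{re.escape(term)}\b", answer)]
    if not found:
        return answer
    notes = "\n".join(f"- {term}: {glossary[term]}" for term in found)
    return f"{answer}\n\nGlossary:\n{notes}"


print(add_glossary("Estimate TAM before you worry about CAC."))
```

Matching whole words only keeps "TAM" from firing inside unrelated words such as "STAMP".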

Privacy Worries: Students didn't want others to see their questions. Making private chats the default led to more people using the system: 68% of students jumped in when they knew their questions would stay private.

Answer Quality: Students needed to trust the AI's answers. Adding source citations to every response helped: 40% of users rated the answers as high quality (4 or 5 out of 5).
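Citations are straightforward when retrieval keeps track of where each passage came from: the answer simply lists the source of every passage the model was given. A minimal sketch, assuming retrieved passages carry a document title and page number; the `Passage` type, the `format_answer` helper, and the sample sources are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    text: str
    source: str  # document title
    page: int


def format_answer(answer: str, passages: list[Passage]) -> str:
    """Append a numbered, de-duplicated source list to an answer."""
    seen: list[tuple[str, int]] = []
    for p in passages:
        key = (p.source, p.page)
        if key not in seen:
            seen.append(key)
    if not seen:
        return answer
    citations = "\n".join(
        f"[{i}] {src}, p. {page}" for i, (src, page) in enumerate(seen, start=1)
    )
    return f"{answer}\n\nSources:\n{citations}"


retrieved = [
    Passage("Excerpt on the founding team", "Case: Hypothetical Startup A", 4),
    Passage("Excerpt on deadlines", "Course syllabus", 2),
    Passage("Another excerpt, same page", "Case: Hypothetical Startup A", 4),
]
print(format_answer("Focus on the founding team's market insight first.", retrieved))
```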

Spotting Struggling Users: The teaching team needed to know which students were struggling. A dashboard tracking student questions helped them identify 12 students who needed extra support before they fell behind.
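A dashboard like this can start as a simple rule over the query log, for example flagging students who keep asking about foundational topics late in the term. A minimal sketch; the topic labels, the week and count thresholds, and the `flag_students` helper are hypothetical, and in practice each query would first need a topic tag. A flag is a cue for a human check-in, not a verdict.

```python
from collections import defaultdict

# Hypothetical log entries: (student, week_of_term, topic)
queries = [
    ("alice", 2, "basics"),
    ("alice", 7, "valuation"),
    ("bob", 6, "basics"),
    ("bob", 7, "basics"),
    ("bob", 8, "basics"),
    ("cara", 8, "basics"),
]


def flag_students(
    entries: list[tuple[str, int, str]],
    topic: str = "basics",
    after_week: int = 5,
    min_count: int = 2,
) -> list[str]:
    """Flag students who asked about `topic` at least `min_count` times
    after `after_week`, a possible sign they are falling behind."""
    counts: dict[str, int] = defaultdict(int)
    for student, week, t in entries:
        if t == topic and week > after_week:
            counts[student] += 1
    return sorted(s for s, n in counts.items() if n >= min_count)


print(flag_students(queries))  # ['bob']
```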

The Results: What Actually Changed

Numbers tell stories, but Harvard's AI experiment revealed something deeper than metrics. Beyond the 70% drop in basic queries and students picking up course jargon three times faster, they discovered patterns in how future leaders actually learn when given 24/7 access to expertise. The insights challenge common assumptions about scaling knowledge work.

How Teams Worked Better

Students who spoke English as a second language caught up quickly. Before the AI, it took them about three weeks to learn key startup terms. With the AI helping, they got comfortable in just one week.

Quiet students surprised everyone. Take "Jay": he rarely spoke up in class, but his usage data showed he was doing deep research at night. When called on, he knocked it out of the park.

The AI never slept. When a student had a 2 AM question about tomorrow's case study, they got help instantly instead of waiting for the morning.

How Leaders Got Smarter

Professors saw learning gaps they'd missed before. When multiple students asked about "founder-market fit," they knew to spend more time on that topic in class.
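Spotting a class-wide gap means counting how many different students ask about a topic, not how many questions it gets, so one very curious student doesn't skew the picture. A minimal sketch with hypothetical data; the `class_wide_gaps` helper and its threshold are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical (student, topic) pairs from the query log.
asked = [
    ("alice", "founder-market fit"),
    ("bob", "founder-market fit"),
    ("bob", "founder-market fit"),
    ("cara", "founder-market fit"),
    ("dev", "cap tables"),
    ("dev", "cap tables"),
    ("dev", "cap tables"),
]


def class_wide_gaps(pairs: list[tuple[str, str]], min_students: int = 3) -> dict[str, int]:
    """Return topics raised by at least `min_students` distinct students."""
    students_by_topic: dict[str, set[str]] = defaultdict(set)
    for student, topic in pairs:
        students_by_topic[topic].add(student)
    return {t: len(s) for t, s in students_by_topic.items() if len(s) >= min_students}


print(class_wide_gaps(asked))  # {'founder-market fit': 3}
```

Here "cap tables" gets as many questions as "founder-market fit" but from a single student, so it stays off the list.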

The teaching team spent less time answering basic questions. Logistics questions like "When is this due?" dropped by 70%, giving professors more time for deeper discussions.

The system showed how students actually learned. Professors could see who prepped weeks ahead (like busy parent "Mary") and who needed help with specific topics.

Class discussions stayed lively. Students didn't just repeat AI answers; they came ready to debate and dive deeper.

What Harvard's AI Experiment Teaches Us

Think of Harvard's AI teaching assistant like that one team member who never sleeps, never complains about repeat questions, and somehow makes everyone else better at their jobs. Here's what made it work.

The Secret Sauce? It's Not What You Think

Remember the last time you hesitated to ask a "dumb question" in a meeting? Harvard's students felt the same way. But give people privacy, and watch what happens. When Harvard made questions private by default, usage exploded like free coffee at an all-hands meeting.

Their trick wasn't just answering questions; it was learning from them. Every late-night query became a window into what students really needed. Think of it as a backstage pass to how people actually learn, not just how we think they do.

The Real Questions For Your Team

Before you jump on the AI bandwagon, ask yourself:

About Your Knowledge Flow: Where's your team's brain trust hiding? Is it in endless email threads, in that one person everyone depends on, or, worse, walking out the door with every departure?

About Your Team's Habits: How do your people really work? Not the 9-to-5 version, but the midnight oil, deadline-crunch reality. What questions keep coming up when people think no one's judging?

About Your Hidden Opportunities: What could your experts tackle if they weren't answering the same questions on repeat? What might patterns in those questions reveal about your training gaps?

The Bottom Line

Harvard didn't just build a chatbot. They created a mirror that showed them how learning actually happens. Their AI didn't replace teachers; it gave them superpowers to see where students struggled and soared.

The most valuable insight? Sometimes the best answers come from understanding why people ask the questions in the first place.

Harvard's success wasn't just about answering questions; it was about transforming how knowledge flows through an organization. The question now isn't whether AI can enhance your team's capabilities but how quickly you'll adapt to this new reality of scaled expertise. The trick isn't teaching your AI everything. It's teaching it the right things.

"Want to spot tomorrow's leaders? Look for those who are already thinking about what questions AI should answer, not just what answers it should give."

Adapt & Create

Kamil

*While the 70% reduction is not explicitly cited, broader industry trends strongly support this figure as plausible. For institutions adopting similar AI tools, a 50–70% reduction in administrative emails would align with both educational and corporate benchmarks, assuming robust integration with existing workflows (e.g., Slack, Teams) and comprehensive training of the AI on administrative content.
